Emergency Server Moves, Automation,

So, I’m having a bad server day. It’s my fault, to a large extent. Earlier this year I…

Week 15 – Outstanding in our field

I had a lovely empire event. It had nice weather, nice people, and things that I wanted to…

apt-get dist-upgrade

The release of a new Debian version is one of those Deep Thought moments. The great machinery has…

Week Twelve: May the… sixth be… something something

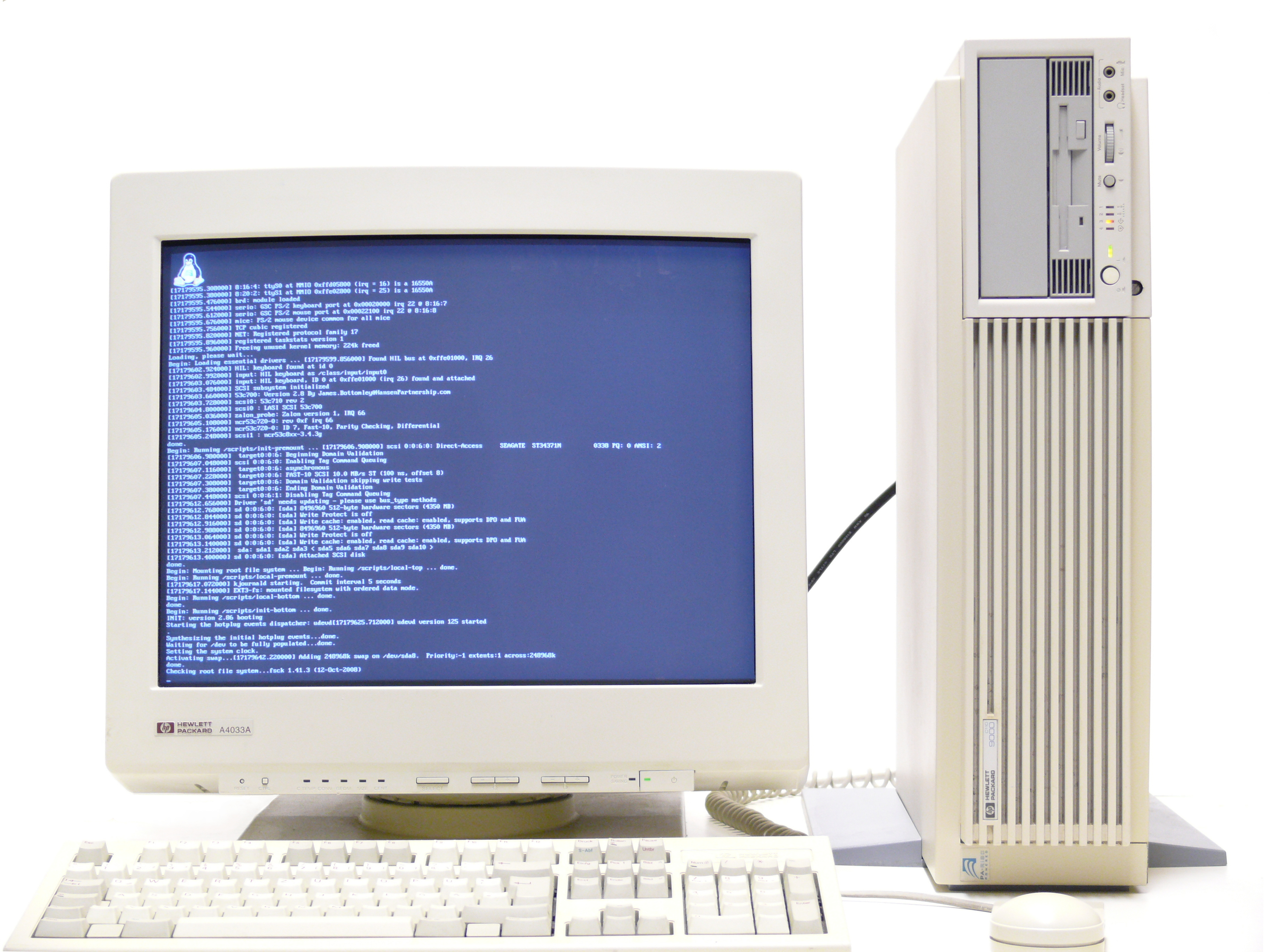

My major success story this week has been to utterly screw up my desktop computer. Over the last…

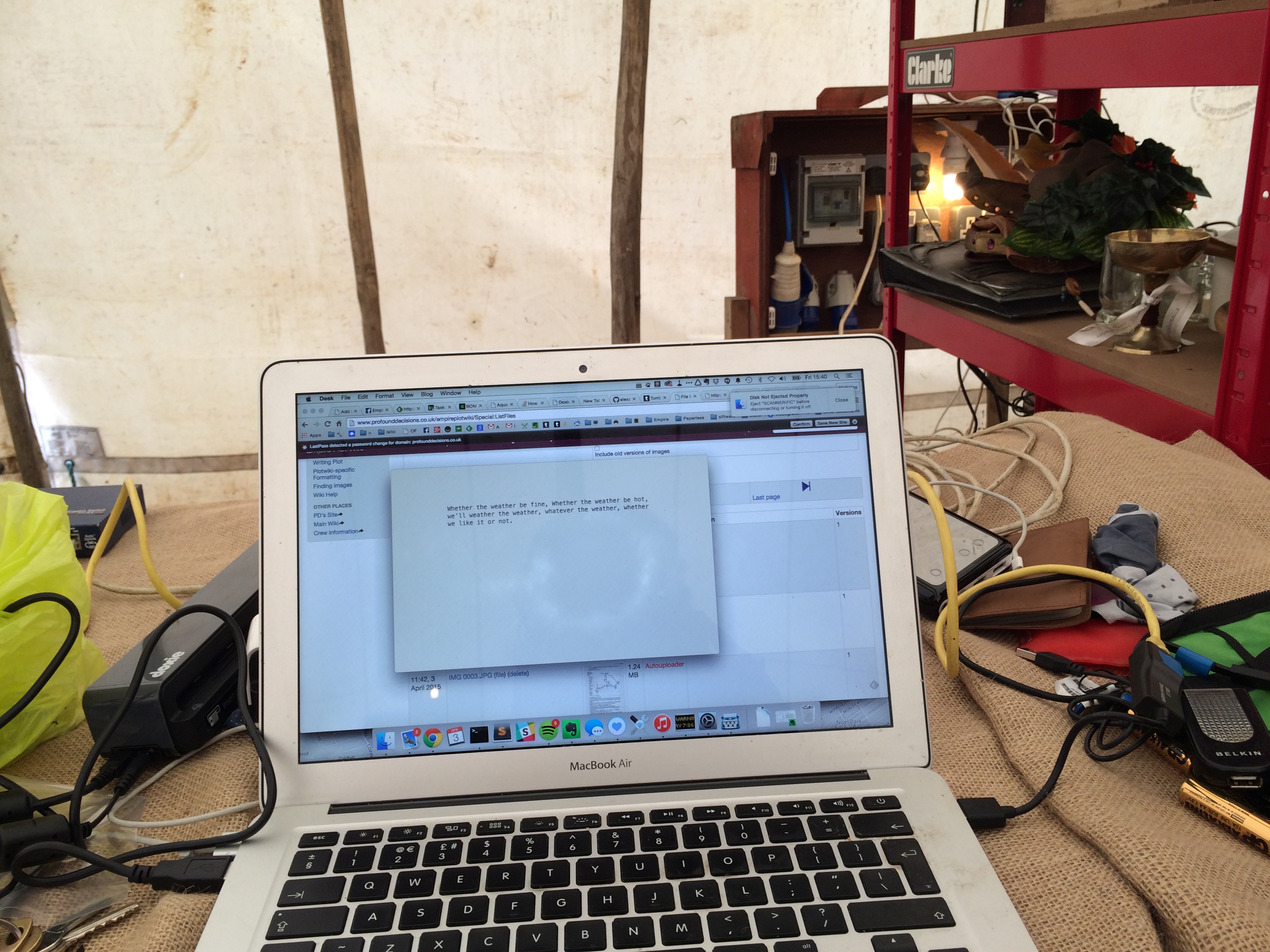

Sysadmin in a Field, Episode one: The tyranny of little bits of paper

For LARP events, including the most recent Empire, PD relies on quite a bit of technology. With all the…

My Terribly Organised Life III:B – Technical Development

Code starts in a text editor. Your text editor might be a full IDE, custom built for your…

Windows 7: How To Automatically Back Up Your PuTTY Connections

Go to: Control Panel > Administrative Tools > Task Scheduler > Create Basic Task (in the bar on the right). Name:…
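The excerpt stops before the action the scheduled task actually runs. PuTTY keeps its saved sessions under the registry key `HKCU\Software\SimonTatham\PuTTY`, so one common approach (an assumption here, not necessarily what the full post does) is to have the task call Windows' `reg export` on that key. The sketch below builds that command; the function name `build_export_command`, the backup directory argument and the `putty-YYYY-MM-DD.reg` filename pattern are all hypothetical.

```python
import subprocess
import sys
from datetime import date
from pathlib import Path

# Registry key where PuTTY stores saved sessions (and cached host keys in a subkey).
PUTTY_KEY = r"HKCU\Software\SimonTatham\PuTTY"

def build_export_command(backup_dir, today=None):
    """Return the `reg export` argument list for a dated .reg backup file.

    Hypothetical helper: the directory and filename pattern are illustrative only.
    """
    today = today or date.today()
    target = Path(backup_dir) / f"putty-{today.isoformat()}.reg"
    # /y overwrites an existing file without prompting, so an unattended task never blocks.
    return ["reg", "export", PUTTY_KEY, str(target), "/y"]

if __name__ == "__main__":
    cmd = build_export_command(sys.argv[1] if len(sys.argv) > 1 else ".")
    if sys.platform == "win32":
        subprocess.run(cmd, check=True)
    else:
        # reg.exe only exists on Windows; elsewhere just show what would run.
        print("Not on Windows; would run:", " ".join(cmd))
```

The Task Scheduler action would then be something like `python putty_backup.py C:\Backups` (both names hypothetical). Restoring is a matter of double-clicking the resulting `.reg` file, or running `reg import` on it.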

Backups

You should back up your data. You should periodically review this backup system to make sure you can…
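One part of reviewing a backup system can be automated: checking that every file in the source also exists in the backup with identical contents. Below is a minimal sketch, assuming the backup is a plain directory copy (not an archive or a deduplicated store); `verify_backup` and `file_digest` are hypothetical names.

```python
import hashlib
from pathlib import Path

def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_backup(source, backup):
    """Compare a source tree against a directory-copy backup.

    Returns (missing, mismatched): relative paths that are absent from the
    backup, and relative paths whose contents differ.
    """
    source, backup = Path(source), Path(backup)
    missing, mismatched = [], []
    # sorted() keeps the report order stable between runs.
    for src in sorted(p for p in source.rglob("*") if p.is_file()):
        rel = src.relative_to(source)
        dst = backup / rel
        if not dst.is_file():
            missing.append(str(rel))
        elif file_digest(src) != file_digest(dst):
            mismatched.append(str(rel))
    return missing, mismatched
```

A clean result only shows the copy is intact; it does not prove you can restore from it, so an occasional real restore to a scratch location is still worth doing.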

To remove a host that denyhosts has banned

Denyhosts is a utility that automatically bans IPs that attempt to SSH into your server and get…
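The commonly cited way to unban a host is to stop denyhosts, delete the IP from `/etc/hosts.deny` and from the tracking files in its `WORK_DIR` (`hosts`, `hosts-restricted`, `hosts-root`, `hosts-valid`, `users-hosts`), then start it again. If the daemon is still running, or the work files still list the IP, it can simply re-add the ban. The sketch below handles the file-editing step only. Assumptions: `WORK_DIR` is `/var/lib/denyhosts` (it varies by distribution; check `WORK_DIR` in your `denyhosts.conf`), addresses are IPv4, and `purge_ip` is a hypothetical name.

```python
import re
from pathlib import Path

# Assumed location: check WORK_DIR in denyhosts.conf, as it differs between distributions.
WORK_DIR = Path("/var/lib/denyhosts")
DEFAULT_FILES = [Path("/etc/hosts.deny")] + [
    WORK_DIR / name
    for name in ("hosts", "hosts-restricted", "hosts-root", "hosts-valid", "users-hosts")
]

def purge_ip(ip, files=DEFAULT_FILES):
    """Drop every line mentioning `ip` from each existing file.

    Returns {path: number_of_lines_removed} for the files that exist.
    Run this only while denyhosts is stopped.
    """
    # Lookarounds stop 10.0.0.1 from also matching 10.0.0.12 or 110.0.0.1.
    pattern = re.compile(r"(?<![\w.])" + re.escape(ip) + r"(?![\w.])")
    removed = {}
    for path in map(Path, files):
        if not path.exists():
            continue
        lines = path.read_text().splitlines(keepends=True)
        kept = [line for line in lines if not pattern.search(line)]
        if len(kept) != len(lines):
            path.write_text("".join(kept))
        removed[str(path)] = len(lines) - len(kept)
    return removed
```

Run it as root between stopping and starting the service (for example via its init script), and keep a copy of the files first if you are not sure the match is specific enough.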