Dark

Light

Unix Shortcuts: p

A thing I rely on in my daily life, bit haven’t really ever documented. It’s a bash function…

Emergency Server Moves, Automation,

So, I’m having a bad server day. It’s my fault, to a large extent. Earlier this year I…

Fear and go seek

The common refrain for people advancing the cause that says that encryption should have government back-doors is that…

Ripping TV Yarns

I’m in the process of ripping some boxsets of DVDs to Plex, and I thought I should probably…

Week 17 – There are lights in the ground, where the lights in the sky have fallen

Busy fortnight. I’ve got a new address. Istic.Networks ltd has a new trading address, since we’ve joined the…

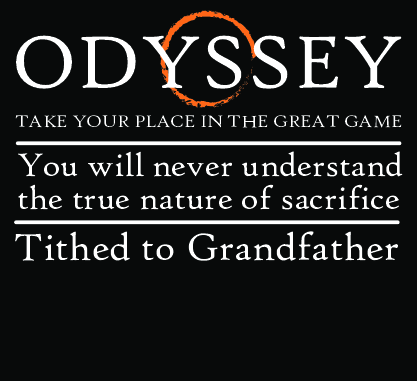

Week 15 – Outstanding in our field

I had a lovely empire event. It had nice weather, nice people, and things that I wanted to…

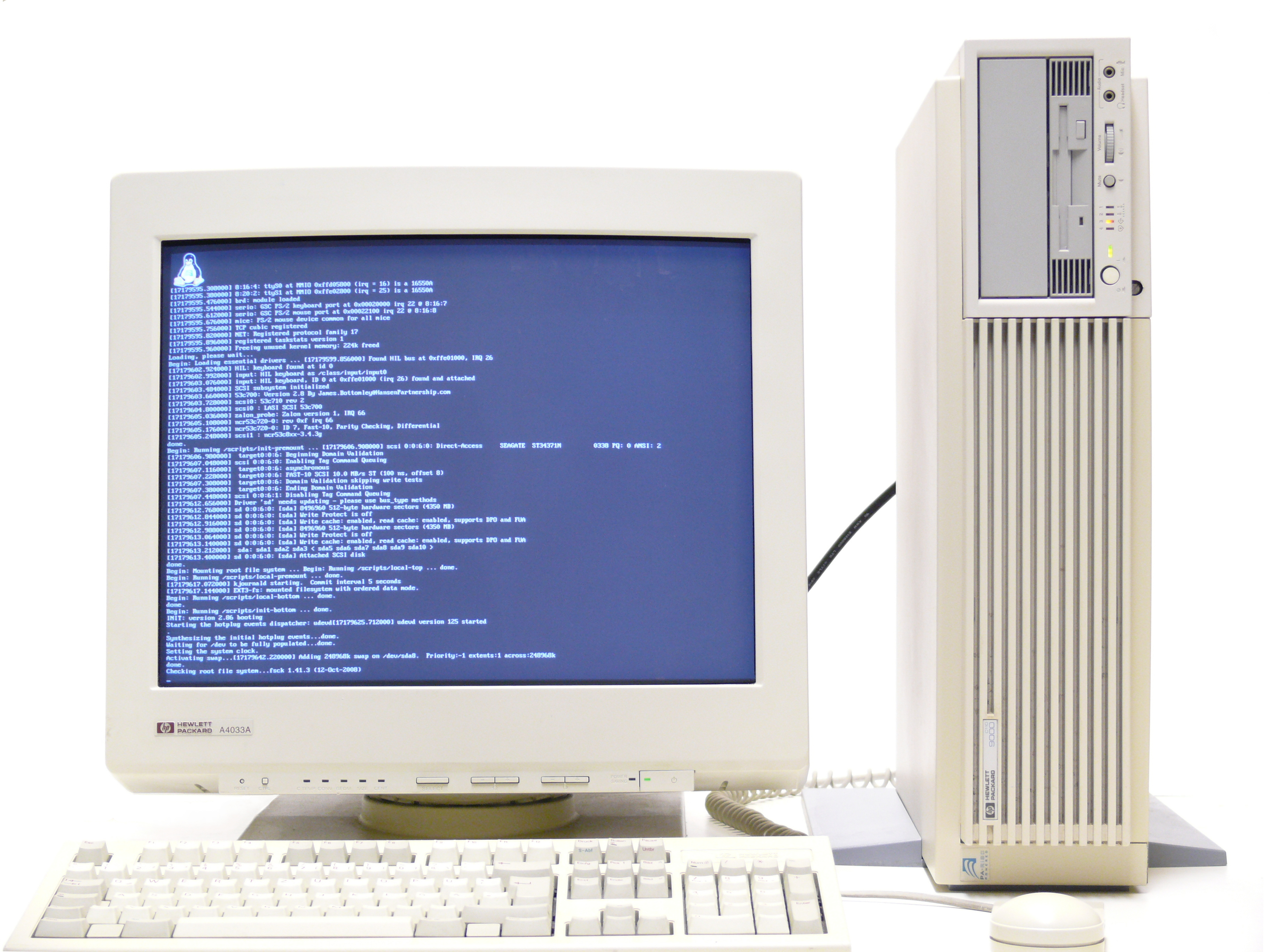

apt-get dist-upgrade

The release of a new Debian version is one of those Deep Thought moments. The great machinery has…

Week Nine – it’s better than bad, it’s good

Quiet work week, so we’ll skip that. Decided that I’d had enough of print statements, and moved both…

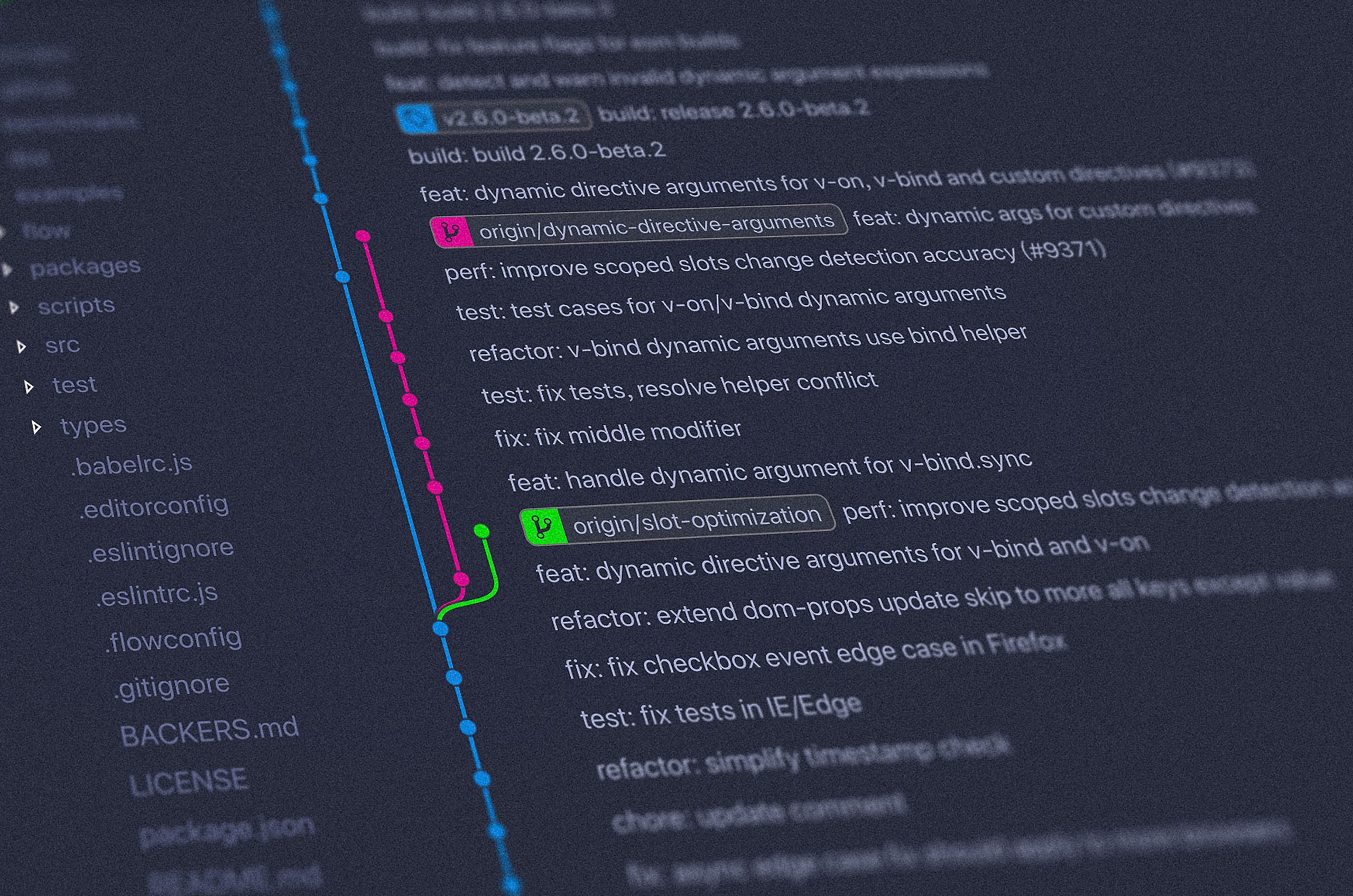

My Terribly Organised Life III:B – Technical Development

Code starts in a text editor. Your text editor might be a full IDE, custom built for your…

Year of the Linux Desktop

So, I’m developing Piracy Inc in Ruby on Rails version 3. Because newness is obviously better, I’m also…